SPECTRUM ENFORCEMENT

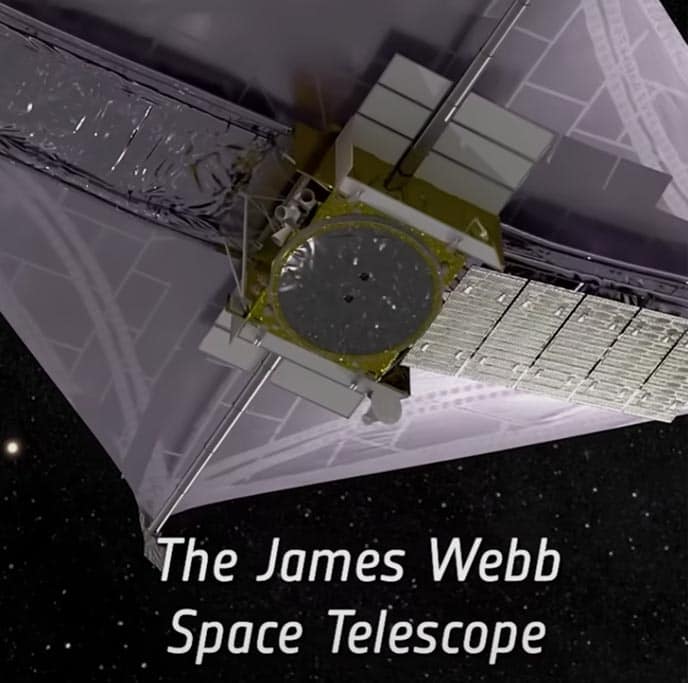

“Spectral Anomaly Detected. 💥

VLS TUBE LAUNCH SUCCESSFUL! 🛰️⚡🔥”

Fizz (RF fox hunter)

Big Data Must Be Culled

Puff Piece: Susan Etlinger is a globally recognized expert in digital strategy, with a focus on artificial intelligence, responsible AI, data and the future of work. In addition to her role at Microsoft, Susan is a senior fellow at the Centre for International Governance Innovation, an independent, non-partisan think tank based in Canada, and a member of the United States Department of State Speaker Program.

Susan’s TED talk, “What Do We Do With All This Big Data?” has been translated into 25 languages and has been viewed more than 1.5 million times. Her research is used in university curricula around the world, and she has been quoted in numerous media outlets including The Wall Street Journal, The Atlantic, The New York Times and BBC. Susan holds a Bachelor of Arts in Rhetoric from the University of California at Berkeley.

Esoteric Influencer Marketing

Puff Piece: Shirli Zelcer is the Chief Data and Technology Officer at dentsu. With over 20 years of experience in analytics and insights, business advisory and organizational design, artificial intelligence and data science, and cloud and marketing technologies, Shirli is at the forefront of innovation ranging from ethical AI and advancements in cloud engineering to future-proofing data-literate organizations around zero-party data. (I write this as an act of journalistic freedom, to document overreach by rogue data scientists gone rampant.)
