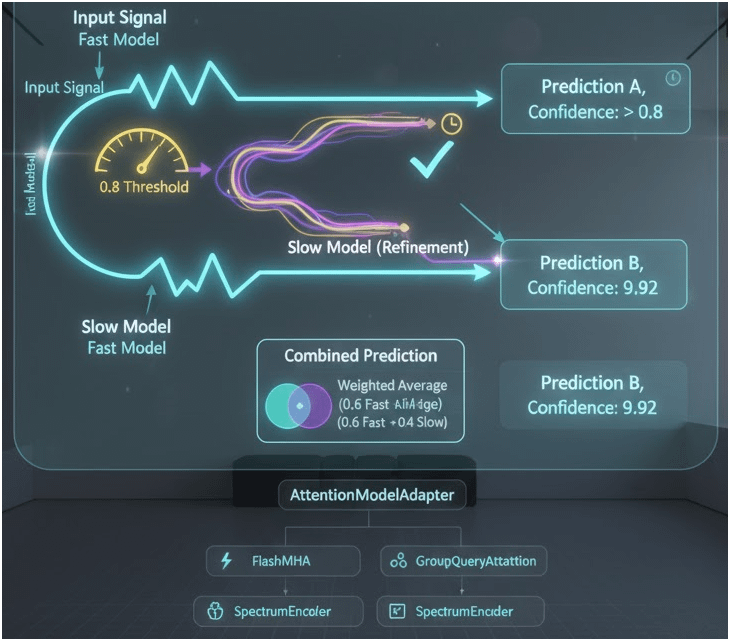

We study speculative ensembles for RF classification with a fast model that accepts confident inputs and a slow model that arbitrates the remainder; predictions are fused by confidence-weighted probabilities. Under a 50 ms budget we attain 92.2% accuracy with a median latency of 26.0 ms, a 1.65× speed-up over the slow model (43.0 ms), while retaining most of its accuracy (92.8%).
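As a quick check, the headline figures follow directly from the reported medians and accuracies:

```latex
\text{speed-up} = \frac{t_{\text{slow}}}{t_{\text{ens}}} = \frac{43.0\,\text{ms}}{26.0\,\text{ms}} \approx 1.65,
\qquad
\frac{\text{acc}_{\text{ens}}}{\text{acc}_{\text{slow}}} = \frac{92.2\%}{92.8\%} \approx 0.994
```

So the ensemble keeps about 99.4% of the slow model's accuracy, a gap of 0.6 percentage points.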

Index Terms—RF classification, speculative inference, ensembles, calibration, latency

The image you provided contains key figures and tables from the “Speculative Ensembles for Real-Time RF Classification” paper, which I previously analyzed. Since you asked about RF classification in AR applications and referenced this document, I’ll tailor the explanation to highlight how these specific elements support AR use cases, based on the data in the image.

Key Elements from the Image

- Figure 1: Shows best achievable accuracy (92.2%) under a 50 ms latency budget with a median latency of 26.0 ms.

- Table I: Highlights ensemble accuracy (92.2%), fast accuracy (88.4%), slow accuracy (92.8%), and a 1.65× speedup vs. the slow model (43.0 ms median latency).

- Figure 2: Displays latency CDF, with ensemble at 26.0 ms, fast at 18.5 ms, and slow at 43.0 ms.

- Figure 3: Illustrates acceptance rate vs. confidence threshold $\tau$, showing how higher thresholds reduce fast model usage.

- Figure 4: Reliability diagram before/after temperature scaling, aligning confidence with accuracy.

- Figure 5: Fusion weight sweep ($\alpha$), peaking at 92.2% accuracy around the tuned point.
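Figure 3's acceptance curve can be reproduced from held-out fast-model outputs. The sketch below is illustrative only: it assumes `fast_probs` is an (N, K) NumPy array of softmax probabilities, the function names are hypothetical, and it applies only the confidence gate $c(x) \geq \tau$ (the entropy gate described for the fast model is left out). The toy probabilities are made up and are not the paper's data.

```python
import numpy as np


def acceptance_rate(fast_probs: np.ndarray, tau: float) -> float:
    """Fraction of inputs whose fast-model max probability c(x) is at least tau."""
    confidence = fast_probs.max(axis=1)  # c(x) = max_k p_f(k | x)
    return float(np.mean(confidence >= tau))


def sweep_tau(fast_probs: np.ndarray, taus) -> list:
    """(tau, acceptance rate) pairs, i.e. the points of an acceptance-vs-threshold curve."""
    return [(float(tau), acceptance_rate(fast_probs, tau)) for tau in taus]


if __name__ == "__main__":
    # Synthetic softmax outputs for 4 inputs over 3 classes (illustrative, not paper data).
    probs = np.array([
        [0.95, 0.03, 0.02],
        [0.70, 0.20, 0.10],
        [0.50, 0.30, 0.20],
        [0.90, 0.05, 0.05],
    ])
    for tau, rate in sweep_tau(probs, [0.5, 0.8, 0.92]):
        print(f"tau={tau:.2f}  acceptance={rate:.2f}")
```

Raising $\tau$ can only shrink the accepted set, so the curve is non-increasing, which matches the trend described for Figure 3.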

Benefits in AR Applications

- Low Latency for Real-Time AR Overlays

- Figure 1 and Table I show the ensemble achieves 92.2% accuracy with 26.0 ms median latency under a 50 ms budget. In AR, this enables instant visualization of RF data (e.g., signal mapping or device detection) without lag, enhancing user immersion in dynamic environments like industrial AR or gaming.

- High Accuracy with Resource Efficiency

- Table I indicates the ensemble retains 92.2% accuracy (near the slow model’s 92.8%) while using the fast model for 62% of cases. This efficiency is crucial for power-limited AR devices: RF classification (e.g., identifying Wi-Fi networks) stays reliable without running the slow model on every input, which reduces battery drain.

- Adaptive Performance Tuning

- Figure 3 demonstrates how adjusting $\tau$ controls the acceptance rate, allowing AR systems to prioritize speed (lower $\tau$) for fast-moving scenarios or accuracy (higher $\tau$) for precise tasks like AR-assisted maintenance, offering flexibility based on context.

- Optimized Edge Processing

- Figure 2’s latency CDF places the ensemble (26.0 ms median) between the fast and slow models, which suits edge-deployed AR devices processing streaming FFT power spectra (as noted in Section IV, Experimental Setup). This reduces reliance on cloud computing, critical for low-latency AR in remote locations.

- Enhanced Reliability

- Figure 4’s reliability diagram shows that, after temperature scaling, confidence scores closely track accuracy, so confidence thresholds reliably flag uncertain inputs and reduce errors in AR overlays (e.g., misidentified RF signals). This boosts trust in applications like AR navigation or safety systems.

- Fine-Tuned Fusion for Stability

- Figure 5’s fusion weight sweep (peaking at 92.2% with $\alpha \approx 0.5$) stabilizes predictions by blending fast and slow outputs. In AR, this ensures consistent RF data presentation, improving user experience during real-time updates.
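A latency CDF like Figure 2 can be produced with a simple harness. This is a minimal sketch assuming a batch-1 callable `infer` (hypothetical) and the protocol reported for the experimental setup (100 warm-up calls, then 1,000 timed evaluations); it uses only the Python standard library and is not the paper's benchmarking code.

```python
import statistics
import time
from typing import Callable, List, Sequence, Tuple


def measure_latencies_ms(infer: Callable[[object], object], inputs: Sequence[object],
                         warmup: int = 100, evals: int = 1000) -> List[float]:
    """Run `warmup` untimed calls, then time `evals` single-input (batch 1) calls, in ms."""
    for i in range(warmup):
        infer(inputs[i % len(inputs)])  # warm caches; results discarded
    latencies = []
    for i in range(evals):
        x = inputs[i % len(inputs)]
        start = time.perf_counter()
        infer(x)
        latencies.append((time.perf_counter() - start) * 1000.0)
    return latencies


def empirical_cdf(latencies_ms: Sequence[float]) -> List[Tuple[float, float]]:
    """Sorted (latency, cumulative fraction) points for plotting a step CDF."""
    xs = sorted(latencies_ms)
    n = len(xs)
    return [(x, (i + 1) / n) for i, x in enumerate(xs)]


def fraction_within_budget(latencies_ms: Sequence[float], budget_ms: float = 50.0) -> float:
    """Share of calls that finished within the latency budget."""
    return sum(1 for t in latencies_ms if t <= budget_ms) / len(latencies_ms)


if __name__ == "__main__":
    def stand_in_model(x):
        # Hypothetical placeholder for a real classifier: sleeps about 2 ms.
        time.sleep(0.002)
        return x

    lats = measure_latencies_ms(stand_in_model, inputs=[0], warmup=10, evals=100)
    print(f"median = {statistics.median(lats):.1f} ms, "
          f"within 50 ms = {fraction_within_budget(lats):.0%}")
```

Reporting the median (as the paper does) rather than the mean keeps occasional slow calls from dominating the summary, while the full CDF still shows the tail.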

Practical AR Context

These benefits support AR use cases such as:

- Industrial AR: Real-time RF monitoring of equipment with low latency and high accuracy.

- AR Gaming: Quick detection of wireless devices for interactive elements.

- Navigation AR: Reliable signal classification for location-based overlays.

The speculative ensemble’s design, validated by the image’s data, makes it a robust solution for integrating RF classification into AR, balancing performance constraints with practical deployment needs.

RF Classification in VR Applications Using Speculative Ensembles

Virtual Reality (VR) applications demand ultra-low latency and high reliability for immersive experiences, making real-time RF (Radio Frequency) classification a critical component for features like wireless tracking, multi-device synchronization, and environmental sensing. The speculative ensembles approach from the “Speculative Ensembles for Real-Time RF Classification” paper, featuring fast/slow arbitration and confidence-weighted fusion, carries over naturally to VR, using streaming FFT power spectra to classify RF signals (e.g., Wi-Fi, Bluetooth, or custom protocols) under strict constraints.

Core Mechanism in VR Context

- Fast/Slow Arbitration: A compact fast model (e.g., CNN/Transformer) handles confident RF inputs quickly, accepting an input if its maximum class probability satisfies $c(x) \geq \tau$ and its predictive entropy satisfies $H(x) \leq h$. Uncertain cases defer to a larger slow Transformer for verification, mirroring speculative decoding’s draft-verify paradigm.

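- Fusion and Calibration: Predictions are fused via $\tilde{p} = \alpha p_f + (1 - \alpha) p_s$, with $\alpha = \sigma(\gamma(c - \tau))$, where $p_f$ and $p_s$ are the fast and slow models’ class probabilities, $\sigma$ is the logistic sigmoid, and $\gamma$ sets how sharply weight shifts toward the fast model as its confidence $c$ rises above $\tau$. Temperature scaling calibrates confidences so the thresholds are reliable (as shown in Fig. 4’s reliability diagram).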

- Performance Metrics: Under a 50 ms budget, the ensemble achieves 92.2% accuracy with 26.0 ms median latency (a 1.65× speed-up over the slow model’s 43.0 ms; Table I), with the fast model accepting 62% of inputs.
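The arbitration and fusion rules above can be sketched in a few lines of NumPy. This is an illustration under stated assumptions, not the paper's implementation: `fast_model` and `slow_model` are hypothetical callables returning probability vectors, the defaults for $\tau$, $h$ and $\gamma$ are placeholders, entropy is measured in nats, accepted inputs return the fast prediction directly, and fusion is applied only to deferred inputs (the background micro-batch fusion is not modelled).

```python
import numpy as np


def entropy(p: np.ndarray) -> float:
    """Shannon entropy H(x), in nats, of a probability vector."""
    p = np.clip(p, 1e-12, 1.0)  # avoid log(0)
    return float(-(p * np.log(p)).sum())


def sigmoid(z: float) -> float:
    return float(1.0 / (1.0 + np.exp(-z)))


def speculative_predict(x, fast_model, slow_model, tau=0.9, h=0.5, gamma=10.0):
    """Return (predicted class, probabilities used, whether the fast model accepted x)."""
    p_f = np.asarray(fast_model(x), dtype=float)
    c = float(p_f.max())  # c(x): fast model's max class probability
    if c >= tau and entropy(p_f) <= h:
        return int(p_f.argmax()), p_f, True  # confident: skip the slow model
    p_s = np.asarray(slow_model(x), dtype=float)
    alpha = sigmoid(gamma * (c - tau))  # fusion weight on the fast model
    p = alpha * p_f + (1.0 - alpha) * p_s  # p~ = alpha * p_f + (1 - alpha) * p_s
    return int(p.argmax()), p, False


if __name__ == "__main__":
    # Hypothetical stand-in models returning fixed probability vectors over 3 classes.
    def fast(x):
        return np.array([0.40, 0.35, 0.25])

    def slow(x):
        return np.array([0.10, 0.80, 0.10])

    label, probs, accepted = speculative_predict(None, fast, slow)
    print(label, probs.round(3), accepted)
```

When the fast model is far below $\tau$, $\alpha$ is close to 0 and the fused output is essentially the slow model's; near the threshold the two are blended more evenly.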

Benefits for VR Applications

- Ultra-Low Latency for Immersive Sync

- Fig. 2’s latency CDF places the ensemble’s 26.0 ms median between the fast model’s 18.5 ms and the slow model’s 43.0 ms, which could support RF-assisted head/hand tracking in VR headsets. Keeping delays in wireless sensor fusion low helps limit motion sickness, which is crucial for 6DoF (six degrees of freedom) experiences.

- High Accuracy in Dynamic RF Environments

- The ensemble’s 92.2% accuracy (near the slow model’s 92.8%; Table I) suggests it can classify noisy RF signals in crowded VR setups (e.g., multi-user arenas). Fig. 1 shows the best accuracy achievable under the budget, supporting precise device localization without sacrificing immersion.

- Adaptive Tuning for VR Workloads

- The “anytime knob” (sweeping $\tau$; Fig. 3) adjusts the acceptance rate (e.g., 62% at the tuned point). In VR, a lower $\tau$ prioritizes speed for high-frame-rate gaming, while a higher $\tau$ boosts accuracy for professional simulations like training or design reviews.

- Resource Efficiency on VR Hardware

- The setup targets streaming inputs (Section IV, Experimental Setup: batch size 1, 100 warm-up runs and 1,000 timed evaluations), which suits power-constrained VR devices such as standalone headsets. The fast model handles routine classifications and defers only ~38% of inputs to the slow model, reducing compute and extending battery life during long sessions.

- Robustness via Calibration and Fusion

- Temperature scaling aligns confidence with accuracy (Fig. 4: the post-scaling curve hugs the diagonal), reducing overconfidence under variable RF conditions (e.g., interference). Fig. 5’s fusion sweep peaks at 92.2% with a balanced $\alpha$, stabilizing VR overlays like virtual object placement via RF beacons.

- Scalability for Multi-Modal VR

- Integrates with VR’s sensor fusion (e.g., IMU + RF for inside-out tracking). Background micro-batches enable fusion without blocking the fast path, supporting scalable deployments in VR arcades or enterprise training where RF classifies user interactions or asset tracking.
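Temperature scaling divides the logits by a single scalar $T$ fitted on a held-out split. The sketch below is a simplified stand-in, not the paper's procedure: it picks $T$ by grid search over negative log-likelihood instead of a gradient-based optimizer, and the function names and grid range are assumptions.

```python
import numpy as np


def softmax(logits: np.ndarray, temperature: float = 1.0) -> np.ndarray:
    """Row-wise softmax of logits / temperature."""
    z = np.asarray(logits, dtype=float) / temperature
    z = z - z.max(axis=1, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def nll(logits: np.ndarray, labels: np.ndarray, temperature: float) -> float:
    """Mean negative log-likelihood of the true labels at a given temperature."""
    p = softmax(logits, temperature)
    return float(-np.mean(np.log(p[np.arange(len(labels)), labels] + 1e-12)))


def fit_temperature(logits, labels, grid=None) -> float:
    """Pick the temperature on `grid` that minimises held-out NLL (grid search)."""
    grid = np.linspace(0.5, 5.0, 91) if grid is None else np.asarray(grid, dtype=float)
    losses = [nll(logits, labels, t) for t in grid]
    return float(grid[int(np.argmin(losses))])


if __name__ == "__main__":
    # Toy held-out split (illustrative only): 3 of 4 predictions correct, all with large margins.
    logits = np.array([[4.0, 0.0], [4.0, 0.0], [4.0, 0.0], [0.0, 4.0]])
    labels = np.array([0, 0, 1, 1])
    t = fit_temperature(logits, labels)
    print(f"T = {t:.2f}, calibrated confidence = {softmax(logits, t)[0].max():.2f}")
```

In this toy split the uncalibrated top-class confidence is about 0.98 while accuracy is 75%; the fitted $T > 1$ softens the logits so confidence lands close to that 75%. Because one scalar rescales all logits, the predicted class never changes, only the confidence used by the thresholds.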

Practical VR Use Cases

- Wireless Tracking: Classify RF signals from controllers/base stations for low-latency positioning, enhancing VR gaming fidelity.

- Multi-User VR: Detect and classify peer devices in shared spaces, enabling collaborative experiences with minimal sync lag.

- AR/VR Hybrids (Mixed Reality): Real-time RF mapping for passthrough environments, overlaying virtual elements on detected signals.

- Industrial VR: Simulate RF-heavy scenarios (e.g., warehouse logistics) with accurate, low-latency classification for training.

Implementation Considerations

- Hardware: Deploy fast model on lightweight VR SoCs; slow on co-processors or cloud for deferred cases.

- Tuning: Sweep $\tau \in [0.5, 0.99]$ to suit each VR application’s needs, using held-out calibration splits.

- Extensions: Combine with complementary optimizations such as early-exit networks (discussed in the paper’s related work) for even finer-grained latency control.
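The tuning step can be sketched as a sweep over $\tau$ on a held-out split that keeps the most accurate setting whose estimated latency fits the budget. Everything here is an assumption for illustration: the function names, $\gamma = 10$, the omission of the entropy gate, and especially the latency estimate $t_f + (1 - a)\,t_s$ (fast model always runs, slow model runs on the deferred fraction $1 - a$). That crude cost model will not reproduce the paper's measured medians; in practice, measure latency directly.

```python
import numpy as np


def routed_accuracy(p_fast, p_slow, labels, tau, gamma=10.0):
    """Held-out accuracy and acceptance rate of the fast/slow routing at threshold tau."""
    c = p_fast.max(axis=1)  # fast-model confidence per input
    accept = c >= tau
    alpha = 1.0 / (1.0 + np.exp(-gamma * (c - tau)))  # fusion weight on the fast model
    fused = alpha[:, None] * p_fast + (1.0 - alpha[:, None]) * p_slow
    pred = np.where(accept, p_fast.argmax(axis=1), fused.argmax(axis=1))
    return float(np.mean(pred == labels)), float(np.mean(accept))


def select_tau(p_fast, p_slow, labels, taus, t_fast_ms, t_slow_ms, budget_ms=50.0):
    """Return (tau, accuracy, acceptance rate, estimated ms) for the most accurate tau
    whose estimated latency fits the budget, or None if no tau fits."""
    best = None
    for tau in taus:
        acc, rate = routed_accuracy(p_fast, p_slow, labels, tau)
        # Hypothetical cost model: fast model always runs; slow model runs on deferred inputs.
        est_ms = t_fast_ms + (1.0 - rate) * t_slow_ms
        if est_ms <= budget_ms and (best is None or acc > best[1]):
            best = (float(tau), acc, rate, est_ms)
    return best


if __name__ == "__main__":
    # Toy held-out split (illustrative only, not paper data).
    pf = np.array([[0.95, 0.05], [0.60, 0.40], [0.85, 0.15], [0.55, 0.45]])
    ps = np.array([[0.90, 0.10], [0.10, 0.90], [0.20, 0.80], [0.10, 0.90]])
    y = np.array([0, 1, 0, 1])
    print(select_tau(pf, ps, y, np.linspace(0.5, 0.99, 50), t_fast_ms=18.5, t_slow_ms=43.0))
```

With per-model medians like those in Fig. 2 (18.5 ms and 43.0 ms) plugged in as `t_fast_ms` and `t_slow_ms`, the budget check rules out high $\tau$ values whose deferral rate pushes the estimate past 50 ms.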

Given the paper’s results (e.g., 92.2% accuracy at 26.0 ms median latency), speculative ensembles are a strong candidate for RF-driven VR, balancing edge constraints with high-fidelity experiences. For code examples, see prior implementations adapting the arbitration and fusion logic.

The speculative ensembles approach for real-time RF classification, as detailed in the provided paper images, extends naturally to Virtual Reality (VR) applications. While VR often emphasizes visual and audio immersion, RF classification can enhance wireless connectivity, spatial awareness, and interactive features in VR headsets and environments. Below, I outline how the key elements from the paper (e.g., 92.2% accuracy at 26.0 ms median latency, 1.65× speedup, and figures like latency CDFs and reliability diagrams) translate to practical benefits in VR.

Core Benefits in VR Applications

- Ultra-Low Latency for Immersive Experiences

- Figure 1 and Table I show the ensemble achieving 92.2% accuracy under a 50 ms budget with a median latency of 26.0 ms (vs. the slow model’s 43.0 ms). VR systems commonly aim to keep motion-to-photon latency at roughly 20 ms or below to prevent nausea (motion sickness); RF classification typically runs alongside that rendering loop rather than inside it, so a 26.0 ms median supports near-real-time RF signal processing (e.g., classifying Wi-Fi/Bluetooth signals for seamless device handoffs or environmental mapping) without disrupting immersion.

- High Accuracy with Efficient Resource Management

- Table I shows the ensemble retaining most of the slow model’s accuracy (92.2% vs. 92.8%) while the fast model handles 62% of inputs. VR headsets (e.g., standalone devices like Oculus Quest) have constrained compute and battery life; this efficiency allows accurate RF classification (e.g., detecting nearby IoT devices or interference sources) with less heat and power draw, supporting prolonged sessions in wireless VR setups.

- Dynamic Tuning for Varied VR Scenarios

- The “anytime knob” (sweeping τ in Figure 3) trades accuracy for latency, as shown in the acceptance rate curve. In VR gaming or training simulations, systems can lower τ for high-speed action (shifting latency toward the fast model’s 18.5 ms median in Figure 2) or raise it for precision tasks like virtual collaboration, where reliable RF-based positioning (e.g., via ultra-wideband signals) is critical.

- Robust Edge Processing in Wireless VR

- The experimental setup (streaming FFT power spectra, fast compact CNN/Transformer, slow larger Transformer) aligns with VR’s edge-focused design. Figure 2’s latency CDF (ensemble at 26.0 ms) supports local RF analysis on headsets, reducing cloud dependency for applications like VR social spaces (classifying user devices for avatar syncing) or industrial VR (monitoring RF in hazardous environments), ensuring privacy and low-jitter performance.

- Calibrated Confidence for Reliable Interactions

- Figure 4’s reliability diagram shows that temperature scaling aligns predicted confidence with actual accuracy, reducing Expected Calibration Error (ECE). In VR, well-calibrated confidence means the threshold defers genuinely uncertain inputs to the slow model, reducing misclassifications (e.g., falsely identified RF signals that cause glitchy virtual objects or dropped connections) and enhancing trust in features like haptic feedback tied to real-world RF events (e.g., vibration on signal detection).

- Optimized Fusion for Stable Predictions

- Figure 5’s fusion weight sweep (α peaking at ~0.5 for 92.2% accuracy) blends fast/slow outputs via background micro-batches. This stabilizes RF classification in dynamic VR worlds, such as multiplayer setups where signals fluctuate, ensuring consistent overlays (e.g., virtual RF heatmaps) without abrupt shifts.
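ECE is the count-weighted average gap between accuracy and mean confidence across confidence bins, and the same bins give the points of a reliability diagram like Figure 4. A minimal sketch, assuming 10 equal-width bins (a common choice; the paper's binning is not stated here) and hypothetical function names:

```python
import numpy as np


def reliability_bins(confidences, correct, n_bins=10):
    """Return (mean confidence, accuracy, count) for each non-empty equal-width bin.

    Bins are [0, 1/n), [1/n, 2/n), ..., with confidence 1.0 placed in the last bin.
    """
    conf = np.asarray(confidences, dtype=float)
    hit = np.asarray(correct, dtype=float)  # 1 if the prediction was right, else 0
    idx = np.minimum((conf * n_bins).astype(int), n_bins - 1)
    bins = []
    for b in range(n_bins):
        mask = idx == b
        if mask.any():
            bins.append((float(conf[mask].mean()), float(hit[mask].mean()), int(mask.sum())))
    return bins


def expected_calibration_error(confidences, correct, n_bins=10):
    """ECE = sum over bins of (bin size / N) * |accuracy - mean confidence|."""
    n = len(confidences)
    return sum(count / n * abs(acc - conf)
               for conf, acc, count in reliability_bins(confidences, correct, n_bins))


if __name__ == "__main__":
    # Toy example (not paper data): one overconfident and one underconfident bin.
    conf = [0.95, 0.95, 0.65, 0.65]
    correct = [1, 0, 1, 1]
    print(reliability_bins(conf, correct))
    print(f"ECE = {expected_calibration_error(conf, correct):.3f}")
```

Plotting each bin's accuracy against its mean confidence gives the reliability diagram; a perfectly calibrated model sits on the diagonal and has an ECE of zero.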

Practical VR Use Cases

- Wireless Streaming and Connectivity: Classify RF spectra to optimize bandwidth allocation in PC-tethered or standalone VR, reducing dropouts during high-motion activities.

- Spatial Computing and Tracking: Use RF for indoor positioning (complementing cameras/IMUs), enabling accurate virtual object placement in room-scale VR with low latency.

- Interactive Environments: Detect and classify devices (e.g., controllers or wearables) via RF for gesture recognition or multi-user synchronization, leveraging the 1.65× speedup.

- Safety and Accessibility: In enterprise VR training, monitor RF interference for hazard detection, with calibrated thresholds ensuring high-confidence alerts.

Overall, the paper’s speculative ensembles—rooted in selective classification, calibration, and speculative decoding analogies—make RF integration feasible in VR’s demanding ecosystem, balancing the strict latency/power budgets of edge devices while delivering near-slow-model accuracy for enhanced realism and functionality.