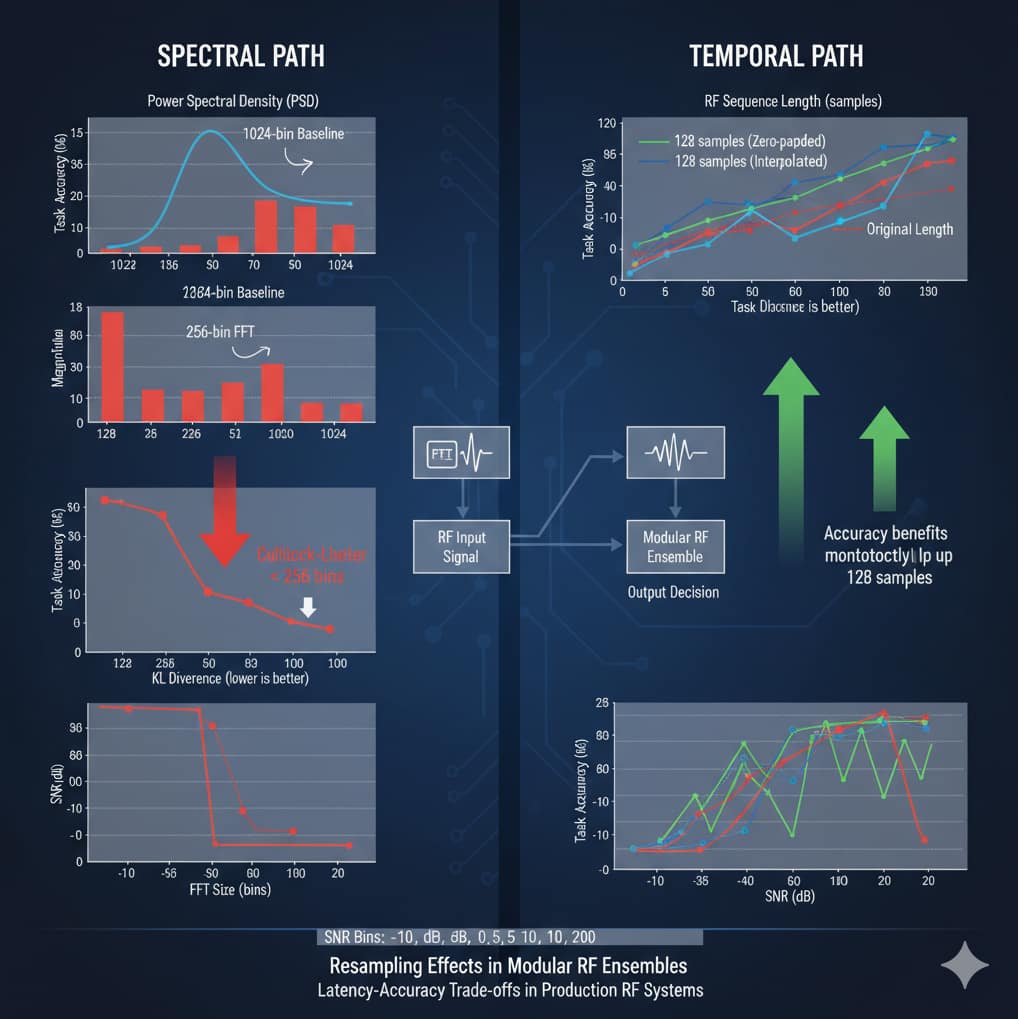

We quantify distortion introduced by downsampling and interpolation in a modular RF ensemble classifier. For the spectral path, we compare power spectral densities (PSDs) at varying FFT sizes against a 1024-bin baseline using Kullback-Leibler (KL) divergence. For the temporal path, we study task accuracy as a function of sequence length with zero-padding and interpolation to 128 samples. Across six SNR bins from −10 dB to 20 dB, we demonstrate critical thresholds: spectral performance degrades sharply below 256 bins, while temporal accuracy benefits monotonically from longer sequences up to 128 samples. Our reproducible measurement harness provides policy guidance for latency-accuracy trade-offs in production RF systems.
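
To make the spectral-path comparison concrete, the following is a minimal sketch of how a reduced-FFT PSD might be scored against the 1024-bin baseline with KL divergence. It is illustrative only and is not taken from the measurement harness. The function names (`psd`, `to_distribution`, `kl_vs_baseline`), the Hann-windowed averaged periodogram, the linear interpolation onto the baseline grid, the `eps` floor, and the direction of the divergence (baseline relative to the reduced PSD) are all assumptions.

```python
import numpy as np


def psd(x, nfft):
    """Averaged periodogram over non-overlapping, Hann-windowed segments (assumed estimator)."""
    nseg = len(x) // nfft
    segs = x[: nseg * nfft].reshape(nseg, nfft) * np.hanning(nfft)
    spec = np.fft.fftshift(np.fft.fft(segs, axis=1), axes=1)
    return np.mean(np.abs(spec) ** 2, axis=0)


def to_distribution(p, eps=1e-12):
    """Floor and normalize a PSD so it sums to 1 and can be treated as a distribution."""
    p = np.asarray(p, dtype=float) + eps
    return p / p.sum()


def kl_vs_baseline(x, nfft, baseline_nfft=1024):
    """KL(baseline || reduced) after linearly interpolating the reduced PSD to the baseline grid."""
    ref = to_distribution(psd(x, baseline_nfft))
    coarse = psd(x, nfft)
    f_ref = np.arange(baseline_nfft) / baseline_nfft  # normalized bin positions
    f_coarse = np.arange(nfft) / nfft
    up = to_distribution(np.interp(f_ref, f_coarse, coarse))
    return float(np.sum(ref * np.log(ref / up)))


if __name__ == "__main__":
    # Synthetic complex tone in white noise, used only to exercise the functions.
    rng = np.random.default_rng(0)
    n = 16384
    t = np.arange(n)
    x = np.exp(2j * np.pi * 0.1 * t) + 0.5 * (rng.standard_normal(n) + 1j * rng.standard_normal(n))
    for nfft in (64, 128, 256, 512, 1024):
        print(nfft, kl_vs_baseline(x, nfft))
```

With `nfft` equal to the baseline size the divergence is zero by construction, which gives a quick sanity check before sweeping smaller FFT sizes.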