In 2025, voice deepfakes aren’t just sci-fi—they’re a real threat. From scammers impersonating loved ones to manipulate victims into sending money, to deepfakes disrupting elections or financial systems, synthetic audio is getting scarily good. Traditional detection methods often require massive datasets and struggle with new voices or accents, leaving us vulnerable. But what if we could spot fakes with just a handful of audio samples, and do it reliably?

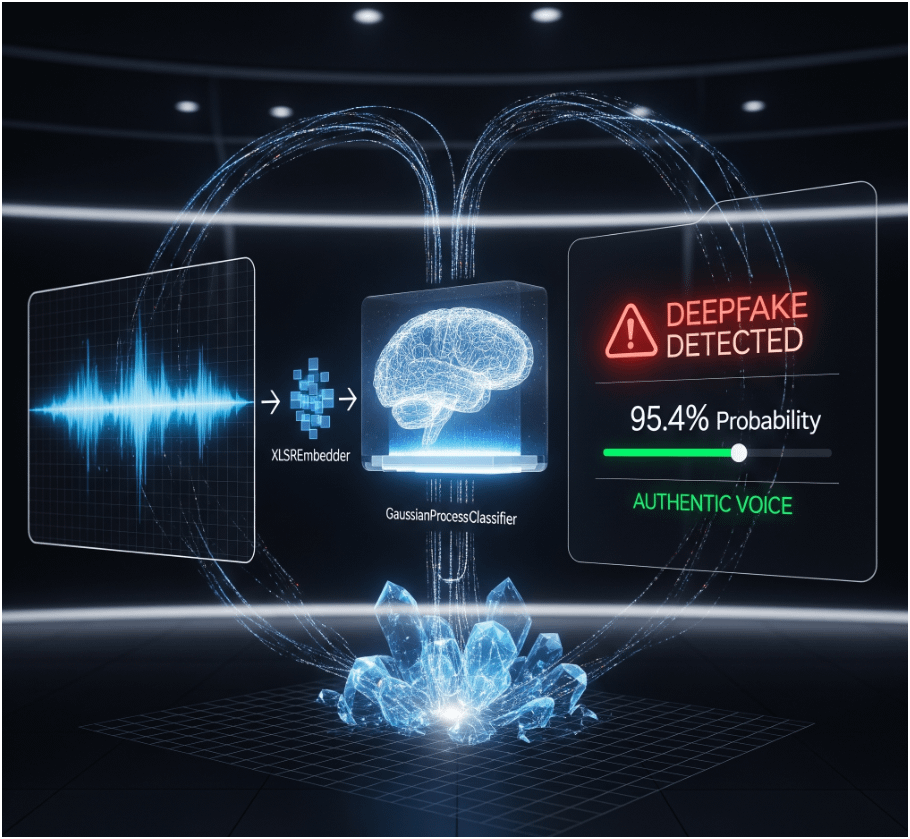

Enter Voice Clone Guard, a groundbreaking few-shot deepfake detection system detailed in a new paper by Benjamin J. Gilbert from the College of the Mainland. Titled “Voice Clone Guard: Few-Shot Deepfake Detection with XLS-R Embeddings and Gaussian Process Calibration,” this research combines cutting-edge AI techniques to achieve top-tier accuracy, even in low-data scenarios. Let’s dive into what makes this system a potential game-changer for audio security.

The Deepfake Dilemma: Why We Need Better Tools

Voice cloning tech, powered by models like Tacotron or VITS, can mimic anyone’s speech with eerie precision. As Gilbert notes in his introduction, this poses risks to everything from fraud prevention to legal evidence. Older systems rely on basic features like Mel-frequency cepstral coefficients (MFCCs) and simple classifiers, but they falter against unseen attacks and don’t provide reliable confidence scores—meaning lots of false alarms or missed detections.

Gilbert’s work addresses these gaps head-on, focusing on few-shot learning (training with minimal examples) and probability calibration (ensuring the system’s confidence matches reality). This is crucial for real-world apps where you can’t always gather thousands of samples per speaker.

How Voice Clone Guard Works: A Smart Blend of AI Tech

At its core, Voice Clone Guard uses two powerful components:

- XLS-R Embeddings for Feature Extraction:

- Built on Facebook’s Wav2Vec2-XLS-R-53 model, a self-supervised speech learner trained on 53 languages. It turns raw audio into rich, 1024-dimensional embeddings that capture contextual nuances.

- Gilbert freezes most layers for efficiency, fine-tuning only the last one to avoid overfitting in few-shot setups. This multilingual backbone makes the system robust across languages like English, Mandarin, or Arabic.

- Gaussian Process (GP) for Classification and Calibration:

- GPs are Bayesian models that excel in low-data regimes, providing not just predictions but also uncertainty estimates.

- Using a Radial Basis Function (RBF) kernel with an optimized length scale (ℓ=1.5), the system classifies audio as real or fake via Laplace approximation for probabilities.

- This setup ensures well-calibrated outputs—meaning if the model says it’s 90% sure something’s fake, it really is right about 90% of the time.

The training is straightforward: Feed in a few examples (as low as 1-4 per class), extract embeddings, and let the GP adapt. No need for massive GPUs or endless data.

Impressive Results: Outperforming the Competition

Gilbert tested Voice Clone Guard on a massive dataset of 12,847 utterances from 427 speakers across 8 languages, including synthetic fakes from TTS systems, real recordings from LibriTTS and VoxCeleb, and adversarial tweaks like noise or compression. Results were averaged over 10 splits for reliability, with additional validation on benchmarks like ASVspoof 2019/2021.

Here’s a snapshot of the performance compared to baselines:

| Method | AUC | EER (%) | ECE ↓ |

|---|---|---|---|

| MFCC + Cosine | 0.782 | 18.5 | 0.127 |

| Spectral + LogReg | 0.834 | 12.8 | 0.098 |

| Raw Audio + CNN | 0.887 | 9.7 | 0.089 |

| LCNN Baseline | 0.912 | 7.4 | 0.074 |

| HuBERT + FineTune | 0.923 | 6.8 | 0.061 |

| XLS-R + GP | 0.956 | 4.2 | 0.032 |

- Discrimination Power: 95.6% AUC and 4.2% EER crush traditional methods, with a 67% relative EER improvement over the best spectral baseline.

- Few-Shot Magic: Achieves 85.2% accuracy with just 4 examples per class, and 72% with only 1—perfect for quick deployment against new threats.

- Calibration Edge: ECE of 0.032 means ultra-reliable confidence scores, far better than overconfident baselines (e.g., MFCC’s 0.127).

- Robustness: Maintains >91% AUC under real-world distortions like MP3 compression. Cross-lingual tests show solid performance, though tonal languages like Mandarin see slight drops (suggesting room for tweaks).

- ASVspoof Validation: Holds up well on standard benchmarks, with 0.889-0.902 AUC, proving generalizability.

Ablations confirm key choices: The ℓ=1.5 length scale balances flexibility, and partial freezing optimizes efficiency without sacrificing much performance.

Why This Matters: Practical, Ethical, and Deployable

Voice Clone Guard isn’t just academically impressive—it’s built for the real world:

- Efficiency: Trains in <20 seconds on a CPU with 16 samples; infers in 50ms per clip. Memory? Just 1.2GB for the model.

- Open-Source: The paper includes code snippets in PyTorch and scikit-learn, making it accessible for developers to build on.

- Multilingual Reach: Handles diverse languages, addressing global needs.

Gilbert thoughtfully discusses ethics: While biases in SSL models cause minor disparities (e.g., 1.8% EER gap for tonal languages), the system promotes fairness audits. It also flags potential dual-use risks, like aiding censorship, and calls for transparent policies. Limitations include vulnerability to adaptive attacks and edge-device constraints, but these are flagged for future work.

Looking Ahead: Future Directions

The paper outlines exciting extensions:

- Multimodal Fusion: Combine with video analysis for full deepfake detection.

- Adaptive Learning: Evolve online against new attacks.

- Adversarial Robustness: Test against smart hackers.

- Deployment Studies: Real-world trials in noisy environments.

In a world where AI voices can fool even experts, Voice Clone Guard offers a beacon of hope. By leveraging self-supervised embeddings and Bayesian uncertainty, Gilbert has crafted a system that’s accurate, efficient, and trustworthy. If you’re in cybersecurity, AI ethics, or just worried about deepfakes, this research is worth a read—it’s a step toward safer audio authentication.

Check out the full paper for details (contact the author at bgilbert2@com.edu). Who knows? This could be the foundation for the next big anti-deepfake tool. What do you think—ready to clone-proof your calls? Drop your thoughts below!